Stop Data Theater: Use Altitude Maps

A guide to measuring the right data, connecting it to the work that matters, and changing data culture at your company

Hi there, it’s Adam. I started this newsletter to provide a no-bullshit, guided approach to solving some of the hardest problems for people and companies. That includes Growth, Product, company building, and parenting while working. If you’re a parent or parenting-curious, I’ve got a podcast on fatherhood and startups: check out Startup Dad.

Every company I work with or have observed struggles with measuring metrics. Either they measure too much that doesn’t matter, at too fine a level of detail, or they measure very little, and certainly not the metrics the company needs in order to be successful. If you don’t have clarity on this and aren’t measuring how the right metrics connect to one another, then you’re engaging in Data Theater. The challenge is finding the right middle ground: avoiding low-level metrics that don’t connect to what the company cares about, without going so high-level that we can’t prove our work is making an impact.

Recently I was having a conversation about this with my friend and data powerhouse Crystal Widjaja. I asked her if she’d grace the digital pages of this newsletter with a guest post on the topic… and she agreed! She did not disappoint. And how could she have? I consider Crystal to be one of the absolute best people to tell you what matters in the world of measurement, how to evolve your data practices, and even how to conduct practical analysis. She is also generous with her knowledge, having created multiple courses on data and analytics for product managers.

In today’s newsletter she covers:

How to recognize when you’re performing Data Theater

Leveraging the Altitude Map to break free of poor data practices

A step-by-step guide (with a template!) to implementing Altitude Maps at your company

February 2024 marked 100 months since I joined Gojek (part of today’s GoTo group) as its first data hire. Over my 5-year tenure, we went from 20,000 orders a day to 5,000,000, on the road to a successful IPO in 2022. One of the most meaningful things we did along the way was an intentional pivot from data theater to data science. Data theater is the sin of using copious amounts of data in ways that make us feel smart and data-driven without actually affecting any real decision-making or core business metrics. [Editor note: it’s another example of Motion over Progress].

I cut my teeth on data at Gojek and have been helping startups and large public companies navigate the maze ever since. Beyond all of the tooling, one foundational thing makes or breaks any data-driven product strategy within a company: how you think about the “physics” of the business, how you track it quantitatively, and how quickly you can differentiate signal from noise.

If you don’t have a strong understanding of the company’s ecosystem and a repeatable (read: sustainable) process for making data-driven product decisions, even best-in-class data tooling inevitably turns OKR setting and product meetings into Data Theater.

In this post, I break down:

Data Theater: the most common symptoms that teams experience when it comes to data theater and their root causes.

Step-by-Step Process: the Altitude Map, my step-by-step process for modeling the metrics that matter, discerning controllable from uncontrollable inputs, and evolving both over time.

An Altitude Map template to help guide the process.

The Data Theater Problem

Every company looks at metrics to understand how the business is doing over some specific period of time. This is necessary, but insufficient. In product strategy, we tend to over-index on goal-setting as the primary driver of success. We create ambitious goals with the intention that they will motivate our teams to achieve outstanding results.

One company I advised believed it didn’t have product-market fit for its data platform aggregator product because very few users retained, even after successfully connecting their data sources to the product. The team’s hypothesis was that it had the wrong audience or needed a better product value proposition, so it iterated on marketing copy and target audiences and kept adding more and more features. But what I found was that while users were completing onboarding successfully, they weren’t experiencing the value the product promised, because of a 16-hour delay between connecting their data and seeing it on their dashboard. The remarketing efforts and new product features ‘worked’, but they didn’t address the input that actually shaped the user experience, and they ended up being a waste of the team’s time.

This story is common—nearly every company has some form of yearly OKRs or KPIs with goals set for different teams in the organization. These metrics often devolve into data theater: serving as superficial justifications for feature prioritization or reduced to simple checklist items. In many cases, they are relegated to a single slide in a weekly meeting or, even worse, only revisited at the end of the year as an afterthought.

Most companies would probably say “we have the data, it’s just not really being used”. When teams say this, they are typically referring to one of a few common symptoms:

Disconnect between the org structure and physics of the business

Rigid focus on select low-level KPIs / metrics / features despite new insights

Lack of a repeatable, systematic approach to separate signal from noise

Symptom 1: Disconnect between the org structure and physics of the business

Understanding the physics of a business means having a deep, holistic grasp of how the organization creates value. It involves knowing the key inputs, processes, and outputs that drive successful outcomes for the business.

When a company uses data as a strategic lever instead of performing data theater, the org structure reflects the company’s value-creation process, with teams and roles aligned around the most important drivers of success. This ensures that everyone is working toward the same overarching goals and that resources are allocated to the areas with the greatest impact.

Organizations that practice data theater aren’t grounded by the physics of the business—the core levers that drive growth, profitability and user value.

This leads to a number of problems:

Teams focus on metrics or initiatives that are not truly critical to the business, leading to wasted effort and resources.

Silos emerge and different departments pursue conflicting or redundant goals.

And at its worst, the disconnect between the physics of the business and the org structure in data-theater-driven companies creates the perfect environment for a cultural pitfall: a culture where a team’s charisma and ability to sweet-talk and manage upward, rather than its actual contributions and criticality to the desired business outputs, become the primary determinants of the resources and recognition it receives.

Symptom 2: Rigid focus on select low-level KPIs, metrics or features despite new insights

Teams performing data theater fall into the trap of fixating on a narrow set of low-level metrics or features even when new information suggests that these areas may not be the most critical drivers of success. Teams are assigned ownership of specific metrics and are quick to celebrate when those metrics improve, even if the higher-level goals of their managers or the broader organization remain unchanged. Having committed to their team-level metric for the year, they keep toiling away at it, while the improvements aren’t yielding results at the company level.

Teams continue to refine and optimize existing features, reporting vanity metrics that show increased adoption or engagement, without demonstrating a clear link to the more critical business objectives. Data is cherry-picked or presented in a way that "proves" the success of a feature or initiative, even if it didn't meaningfully impact the higher-level metrics it was intended to drive.

This may happen because the team lacks a clear understanding of how its work connects to the broader business goals, or because it intentionally omits information that would invite further scrutiny. This type of data theater creates a false sense of progress and can lead organizations to keep investing in teams or initiatives that fail to deliver real value.

Symptom 3: Lack of a repeatable, systematic approach to separate signal from noise

In data-theater-led companies, the appearance of being data-driven takes precedence over actually generating actionable insights. This symptom often shows up as the lack of a repeatable, systematic approach to separating meaningful signals from the noise and normal variance in their metrics.

Teams go through the motions of tracking clickstream data and reviewing it in weekly meetings simply because it’s a required checklist item in their product-building workflow, without a genuine commitment to understanding and acting on it.

Leaders have knee-jerk reactions to yesterday’s short-term fluctuations in metrics, celebrating when they go up and sounding alarms when they go down, without a clear understanding of whether these changes are driven by normal variance or by specific actions the team took.
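One simple way to put a number on “normal variance” before reacting to yesterday’s dip is a control-chart style band: flag a day only if it falls outside the trailing mean plus or minus three standard deviations. This is a minimal sketch, assuming a short window of daily values and the 3-sigma rule; neither the window nor the rule comes from the article, and the numbers are invented for illustration.

```python
from statistics import mean, stdev


def is_unusual(history, today, k=3.0):
    """Return (flag, band): flag is True if today's value falls outside
    mean +/- k * sample standard deviation of the recent history.

    history: recent daily values of one metric (assumed input)
    k: band width in standard deviations (3 is the classic control-chart choice)
    """
    if len(history) < 2:
        raise ValueError("need at least two historical points")
    center = mean(history)
    spread = stdev(history)
    lower, upper = center - k * spread, center + k * spread
    return not (lower <= today <= upper), (lower, upper)


# Hypothetical daily booking conversion rates in %, not real data
history = [45.1, 44.8, 45.6, 44.9, 45.3, 45.0, 44.7, 45.4]
flag, band = is_unusual(history, today=44.6)
print(flag, [round(b, 2) for b in band])
```

Here 44.6 sits inside the band, so it reads as ordinary day-to-day noise rather than a reason to sound the alarm.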

The lack of an established framework for identifying key drivers and testing hypotheses leads to inconsistent and insufficiently actionable analysis. Teams become frustrated and eventually give up on the process altogether. Even when they find real insights and make improvements, the absence of a systematic approach makes it challenging to build upon these successes and drive continuous optimization.

The Solution: Avoid the Data Theater trap using Altitude Maps

Setting goals and looking at data are important activities. They’re necessary, but insufficient. In my experience, it’s the activities that surround the goal setting that actually drive growth and improvement. Rather than setting-and-forgetting product goals, we need to start by clearly identifying how our product work drives impact within the context of the broader company.

The Altitude Map is designed to address this challenge, and it sets us up to tackle downstream problems by expanding our data capabilities. It’s a quantitative map of the product area we own (our altitude) that describes how our work drives impact for the organization and helps us identify the metrics we need to focus on to make that impact a reality. Altitude Maps organize, align, and communicate the physics of our business. Knowing how the altitudes connect helps us understand how our individual feature solutions contribute to higher-level business outcomes, with our teams, KPI goals, input levers, and product features all in one place.

The Altitude Map is also a repeatable process that I use with every company I advise. It enables product managers to go beyond simple goal-setting:

Define the altitudes: start by clearly articulating the high-level goals your manager is trying to achieve and how they’re measured (the Altitude Scorecard), your own Altitude Scorecard, and your Solution Altitude features.

Map the KPIs at each altitude: for each Altitude, identify the specific KPIs and metrics that best capture its performance. These metrics should be actionable, measurable, and directly tied to the objectives of the altitude above it.

Determine the input levers: identify the specific user actions, experiences, processes, or initiatives in the product that influence the outcome of each KPI.

Establish a measurement framework: leverage a systematic process for regularly tracking and analyzing the KPIs at each altitude, prioritizing the input levers, and testing hypotheses for new input levers.

Continuously update: as the business evolves and new insights emerge, update the altitude map to ensure it remains relevant and effective. Promote the input levers that most strongly influence your KPIs and discard those that are disproven through testing.

As the name implies, it “maps” the evolving ecosystem of the business model, product goals, user experiences, and product features (solutions). A repeatable, systematic approach helps you avoid the pitfalls of data theater and ensures that product efforts are aligned with the key drivers of success for the business.

Let’s step through the different steps involved in creating your Altitude Map.

Step 1: Define the Altitude Space between you and your manager

Early in our careers, we may land in PM roles where we’re given explicit, clear boundaries on which features or products to work on. We may be given one or two metrics to own (a single altitude within the physics of the business) until our product area expands or evolves. At that point, we have more choices and more autonomy across multiple levels and breadths of altitudes.

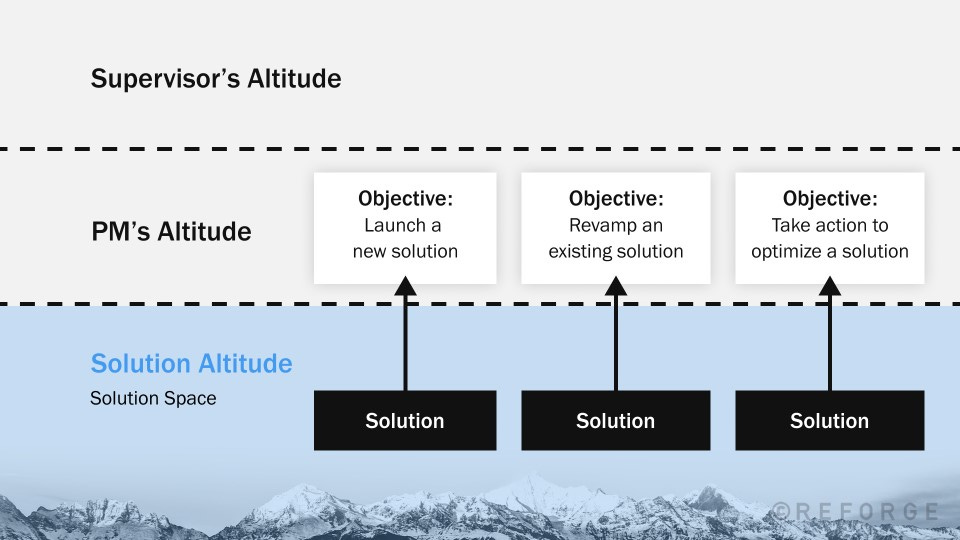

The exact number of altitudes at a company will vary, but for our purposes, it's most useful to define at least these three levels:

Our manager’s altitude. This is the level above us, and it includes the outcome metrics that our manager is most focused on influencing.

The altitude we own as a product manager / data scientist / product owner. This is the level where we sit and it includes the quantitative and qualitative description of our product area.

Our solution-space altitude. This is the level at which we’re implementing specific features in our product and managing day-to-day work.

These three altitude levels are the ideal starter set of anchor points: they avoid low-level metrics that don’t connect to what the company cares about, without going so high-level and unmeasurable that we can’t prove our work is making an impact.

Beginning at the level above ours, our manager’s level, is important because our manager is our primary audience and the person we support and present to most frequently. Understanding what they care about is critical to tying our work to their priorities and getting them invested in what we are doing. If we don’t start our altitude map by looking first at our manager’s key outcome metrics, there is a good chance we’ll work on things that don’t directly connect to either of our priorities, which is a worst-case scenario for career progression.

The key question we should consider at this point is: What metrics capture my manager’s main priorities in terms of the value they are expected to generate for the business?

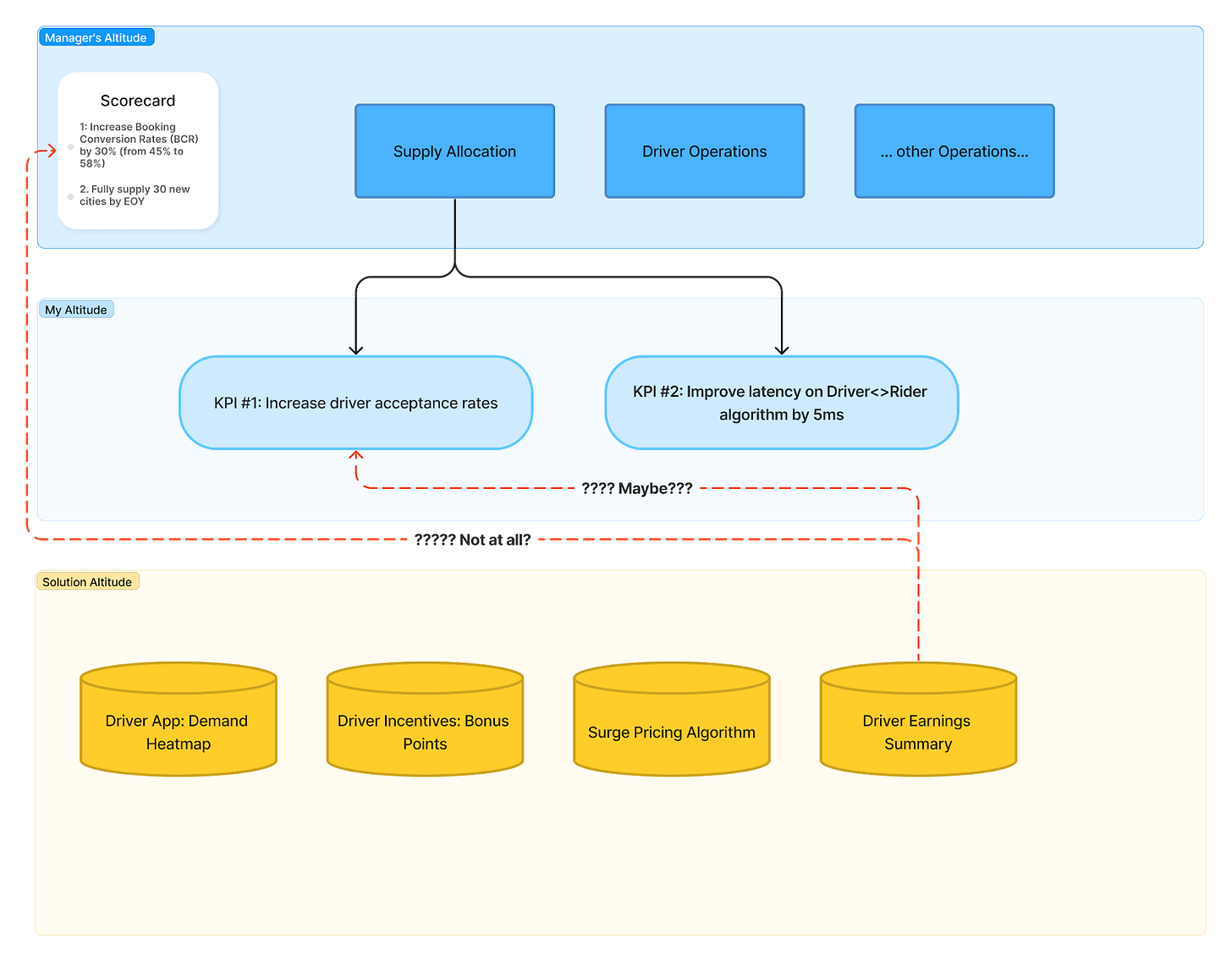

For example: if I am the Product Manager of Driver Operations at Gojek, a superapp in Indonesia, my manager is the head of Driver Operations with 2 KPIs:

Increase Booking Conversion Rates (BCR) by 30% (from 45% to 58%)

Fully supply 30 new cities by EOY
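Because the first KPI states both a relative lift (30%) and a before/after pair (45% to 58%), it helps to spell out the arithmetic when writing it into a scorecard. A quick sketch: going from 45% to 58% is 13 percentage points, about a 28.9% relative lift, while a full 30% relative lift on 45% would land at 58.5%.

```python
def relative_lift_pct(baseline, new):
    """Relative change from baseline to new, in percent."""
    return (new - baseline) / baseline * 100


def apply_lift(baseline, lift_pct):
    """Value reached by raising baseline by lift_pct percent (relative)."""
    return baseline * (1 + lift_pct / 100)


baseline_bcr, target_bcr = 45.0, 58.0  # BCR in %, from the KPI above

print(round(target_bcr - baseline_bcr, 1))                     # 13.0 percentage points
print(round(relative_lift_pct(baseline_bcr, target_bcr), 1))  # 28.9 (% relative)
print(round(apply_lift(baseline_bcr, 30), 1))                  # 58.5
```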

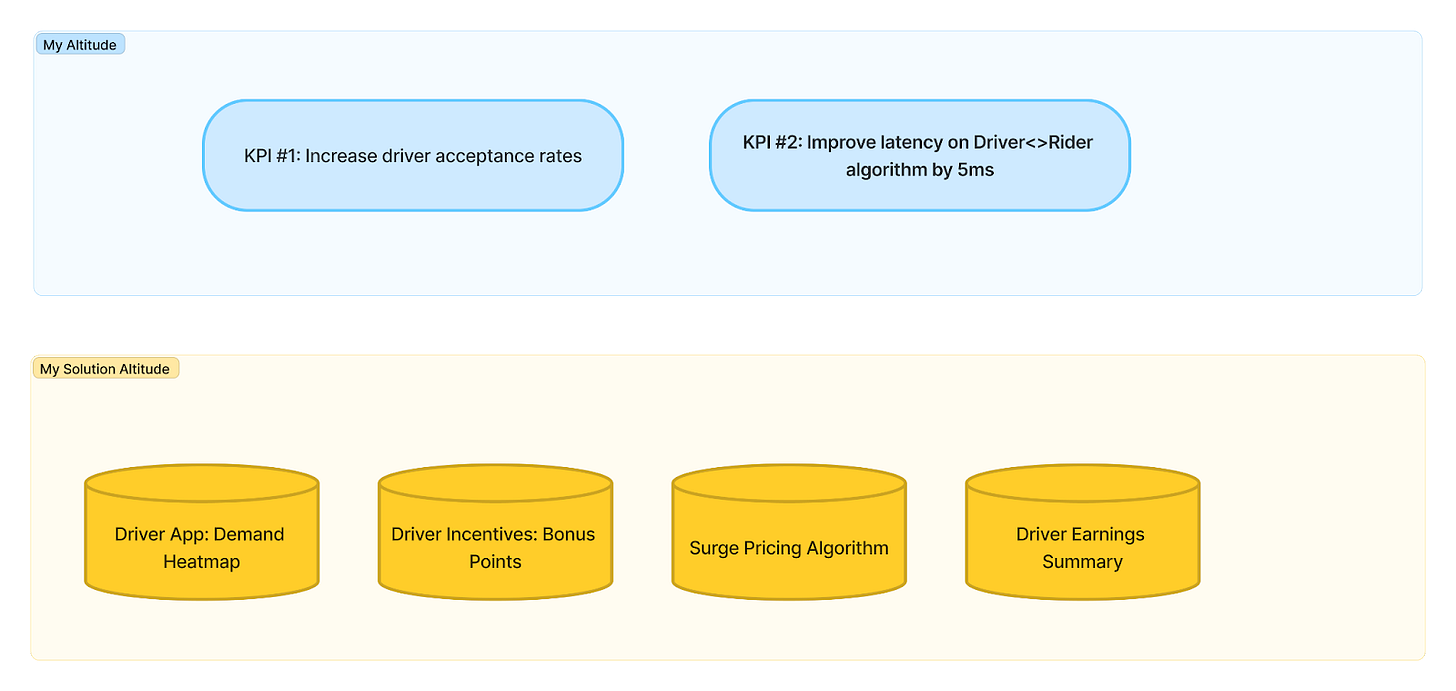

My altitude contains two key metrics: Increase driver acceptance rates and decrease latency of the algorithm that matches customer bookings with drivers.

Finally, my solution space contains all of the feature groups that I have ownership of in my role: the demand heatmap in the driver app, the incentives structure that rewards drivers for completing orders, the algorithm that determines where and how surge is priced, and a weekly earnings summary report shown to drivers in their app.

Step 2: Map the connections of your altitude scorecard

In an ideal situation, there is a constellation of measurable, interconnected KPIs we can trace from the top company-level altitude, through our manager’s altitude, down to our own altitude scorecard metrics, on which each feature in our solution altitude has a measurable impact.

Referring back to the Gojek example, my first KPI, “Increase driver acceptance rates”, has two attributes of a good altitude scorecard:

A clear connection to my manager’s altitude scorecard: “Increase Booking Conversion Rates by 30%”.

A logical connection to at least one feature in my solution altitude: the surge pricing algorithm that uses financial incentives to improve the desirability of an order to a driver.

At this step, we may find that some of the features in our solution altitude don’t have an obvious connection to our altitude’s scorecard or our manager’s altitude. As companies evolve, features may become redundant or less relevant to today’s product strategy. You may have inherited a relic of a feature that made sense in the past but no longer contributes to your altitude’s goals or to your manager’s altitude above you.

For example, the driver earnings summary screen in the driver app may have been a necessary feature before we integrated drivers with bank accounts, but no longer directly connects to our altitude’s scorecard or our manager’s scorecard.

This discovery is one of the most valuable outcomes of the altitude map exercise: some features in the product are more valuable than others as measured by their ability to connect to both your altitude and your manager’s altitude.
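The two attributes of a good altitude scorecard KPI (an upward link to the manager’s scorecard and a downward link to at least one feature) can be checked mechanically once the map is written down as data. This is a minimal sketch, assuming a hand-maintained mapping; the class and method names are hypothetical. Only the surge pricing to driver acceptance link and the earnings summary’s lack of a link come from the Gojek example; every other link is a placeholder for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class AltitudeMap:
    manager_kpis: set
    # my altitude KPI -> manager KPIs it feeds
    my_kpis: dict = field(default_factory=dict)
    # solution feature -> my altitude KPIs it is expected to move
    features: dict = field(default_factory=dict)

    def kpis_missing_links(self):
        """My KPIs with no link up to a manager KPI or no feature moving them."""
        moved = {kpi for kpis in self.features.values() for kpi in kpis}
        return sorted(
            kpi for kpi, ups in self.my_kpis.items()
            if not (ups & self.manager_kpis) or kpi not in moved
        )

    def orphan_features(self):
        """Features that move none of my KPIs that ladder up to the manager."""
        laddered = {kpi for kpi, ups in self.my_kpis.items() if ups & self.manager_kpis}
        return sorted(f for f, kpis in self.features.items() if not (kpis & laddered))


gojek = AltitudeMap(
    manager_kpis={"Booking Conversion Rate", "New cities fully supplied"},
    my_kpis={
        "Driver acceptance rate": {"Booking Conversion Rate"},
        "Matching latency": {"Booking Conversion Rate"},  # placeholder link
    },
    features={
        "Surge pricing algorithm": {"Driver acceptance rate"},  # from the example
        "Demand heatmap": {"Driver acceptance rate"},           # placeholder link
        "Driver incentives": {"Driver acceptance rate"},        # placeholder link
        "Weekly earnings summary": set(),                       # no longer connects
    },
)
print(gojek.orphan_features())
print(gojek.kpis_missing_links())
```

With these toy links, the weekly earnings summary surfaces as an orphan feature, and matching latency is flagged because none of the listed features is mapped to it: exactly the kind of gap this step is meant to expose.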

Zooming out even further we might conclude that the feature we own could be better leveraged by another team.

In a data-theater-driven company, neglected features are often used as showcase spotlights because it’s easy to improve their adoption rates or vanity metrics and demonstrate that the team is being “productive.”

Using altitude maps explicitly prevents us from going off target by ensuring the metrics we report are logically grounded in the scorecard metrics—value we aim to generate for our users and for the business. If our altitude map can’t illustrate how improvements in a feature would drive success for our scorecard or our manager’s scorecard, then we shouldn’t be performing the data theater of showing off that feature’s metrics or improvements.

Step 3: Identify the inputs between your altitude space and your solutions

Inputs are the possible metrics, factors, and variables that impact our KPI. These inputs, when optimized, are possible “answers” to achieving our KPI goal and positively impact our manager’s scorecard KPIs.

One of our biggest data-related struggles as PMs is choosing the highest-leverage metrics to focus on. If we align ourselves to bad metrics, we invest our time in bad bets that don’t add value. When we lack the right metrics, we often can’t connect our outcomes to our manager’s priorities or to the overall business goals. As a result, we can’t demonstrate that our work drives meaningful business impact, which in turn makes us more likely to perform data theater and show off vanity metrics. Our goal at this step is not to impress our executives with radical upward swings in relatively meaningless numbers. It’s to align our work with the input drivers of our manager’s altitude scorecard metrics.

At the other extreme, we can’t focus solely on solution-level metrics—the set of metrics that describe the performance of individual features (or bundles of features) that have minimal connection to anything that matters to our business. For example, a PM who recently launched a comment feature within their product may focus on metrics that describe the usage of that comment feature, only to later realize that usage of this feature doesn’t drive anything meaningful for their altitude metrics like retention or monetization.

Instead, having grounded ourselves in our Altitude Map’s connections in the previous step, what we need to do next is to identify the inputs to our altitude’s KPIs one by one.

Here’s a thought experiment: List as many orange items as you can think of in 10 seconds. Now, list as many orange items as you can think of in a construction site. Typically, this narrower, concrete space (pun intended) increases how many items we come up with.

Think of each KPI in our altitude as the equivalent of the “construction site”: a sandbox that focuses the input ideation process of the altitude map, so we can search for potential inputs and possibilities efficiently.

And guess what: because the altitude map’s value comes primarily from the process, it doesn’t really matter which KPI you start with. If you have a dozen KPIs, I suggest starting with the one that you:

Have already spent a lot of time thinking about (you know its basic inputs well)

Suspect has actionable (controllable) inputs that you have yet to discover

I start by identifying the user-facing product experiences that correspond to the KPI. It is important to identify not just the specific screen or funnel in the product, but also the context of the experience that might influence the KPI in one of four ways:

What increases the absolute # of the metric?

What decreases the absolute # of the metric?

What increases the relative % change?

What decreases the relative % change?
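These four questions matter because a count and a rate built from the same events can move in opposite directions. A minimal sketch with made-up weekly numbers, assuming the KPI is an acceptance rate defined as accepted bids divided by offered bids: offering drivers more bids raises the absolute number of acceptances while lowering the rate.

```python
def summarize(offered, accepted):
    """Absolute count and rate for one period."""
    return {"accepted": accepted, "rate": accepted / offered}


# Hypothetical weekly figures, for illustration only
before = summarize(offered=1000, accepted=600)
after = summarize(offered=1500, accepted=750)

absolute_change = after["accepted"] - before["accepted"]
rate_change = after["rate"] - before["rate"]
print(absolute_change)        # 150 more accepted bids
print(round(rate_change, 2))  # -0.1: the rate fell from 0.6 to 0.5
```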

Tip 1: Map out the product experiences related to the KPI

Start by identifying the specific product screens, funnels, or user flows that are directly related to the subgoal. Consider the context of these experiences and how they might influence user behavior. If the KPI is the “number of users who book a ride”, relevant in-product experiences could be:

Number of available drivers displayed on the map

The pickup ETA

How long it takes the screen to load

Tip 2: Consider the "last event" scenario

Imagine that any tracked event could be the last interaction a user has with your product. What would you want to know about this final experience? This thought exercise can help you identify critical inputs that may be causing users to abandon the product. For instance, did the user encounter a "No Results Found" message after searching for a contact? Did they experience an error while adding a new payment method?
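The “last event” question can be answered with a short pass over an event log: keep the final event for each user who stopped using the product, then count how often each event appears in that position. This sketch assumes a hypothetical log of (user_id, timestamp, event_name) tuples and an already-defined set of churned users; the event names echo the examples above.

```python
from collections import Counter


def last_event_counts(events, churned_users):
    """Count the final event seen for each churned user.

    events: iterable of (user_id, timestamp, event_name) tuples (hypothetical schema)
    churned_users: set of user_ids treated as having abandoned the product
    """
    last = {}
    for user_id, ts, name in events:
        if user_id in churned_users and (user_id not in last or ts > last[user_id][0]):
            last[user_id] = (ts, name)
    return Counter(name for _, name in last.values())


# Toy event log, for illustration only
events = [
    ("u1", 1, "open_app"), ("u1", 2, "search_contact"), ("u1", 3, "no_results_found"),
    ("u2", 1, "open_app"), ("u2", 2, "add_payment_method"), ("u2", 3, "payment_error"),
    ("u3", 1, "open_app"), ("u3", 2, "search_contact"), ("u3", 3, "no_results_found"),
    ("u4", 1, "open_app"), ("u4", 2, "book_ride"),
]
print(last_event_counts(events, churned_users={"u1", "u2", "u3"}).most_common())
```

In this toy log, `no_results_found` is the most common last event among churned users, which would make it the first candidate input to investigate.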

Tip 3: Ask yourself what needs to be true for the user to convert on this subgoal?

If a user doesn’t have 10 friends, it’s impossible for them to convert on Facebook’s famous “10 friends in 7 days”. Therefore, an input to this KPI could be “number of friends a user has.” It’s fine to include inputs that aren’t perfectly quantifiable—proxy metrics can be created or tracked if deemed necessary in the next step of the altitude map.

For our Gojek example, we might start with our product experience flow from passengers placing orders to order acceptance:

Next, I might want to directly break down the inputs to the Driver Acceptance KPI:

These become additional “sandboxes” to expand my input exercise. At this point, it’s tempting to practice data theater by jumping straight into each sandbox to generate another set of analyses, but the simplest restraint mechanism is to size the input factors at each stage with a single measurement: what % of our KPI falls within each of these conditions?

Here we’d see that the largest drivers (pun intended) of low driver acceptance rates are 1) ignored bids and 2) rejected bids. This gives me enough reason to go further down the rabbit hole on these two inputs: what types of orders are rejected versus ignored versus accepted?
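Sizing each condition is usually a single group-by: what share of bids ends in each outcome. A minimal sketch over a hypothetical list of bid outcomes; the labels follow the text, and the counts are invented rather than Gojek’s actual figures.

```python
from collections import Counter


def outcome_shares(bid_outcomes):
    """Share of bids ending in each outcome, largest first."""
    counts = Counter(bid_outcomes)
    total = sum(counts.values())
    return [(outcome, n / total) for outcome, n in counts.most_common()]


# Hypothetical bid log, for illustration only
bids = ["accepted"] * 50 + ["ignored"] * 30 + ["rejected"] * 20
for outcome, share in outcome_shares(bids):
    print(f"{outcome}: {share:.0%}")
```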

Most teams don't get to this level of segmentation because they are only tracking "completed orders"—an outcome metric attached to a goal—versus an analytical data point such as “how many other opportunities does the driver have at the time of receiving this order?” Remember: the best input metrics measure what the user actually experiences. The most successful PMs see their job as one of discovery: what are the inputs that affect my user’s experience (and the success of the product) and to what extent can I leverage them?

For example, further breaking down the input map for our Gojek example, we found:

Bookings that were not converted due to drivers ignoring the bids tend to have longer trip distances compared to completed bookings, suggesting that drivers are more likely to ignore orders with long distances.

Ignored bids exhibit patterns within specific time windows, which vary depending on the service area. For instance, in Northern Jakarta, most GoRide bids are ignored during lunchtime, whereas in Southern Jakarta, bids are mostly ignored during the evening rush hour.
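Both findings come from segmenting the same bid log along one extra dimension: trip distance by outcome, and hour of day by service area for ignored bids. A minimal sketch, assuming each bid is a dict with the hypothetical keys `area`, `hour`, `distance_km`, and `outcome`; the toy rows are shaped to mirror the two patterns described above and are not real data.

```python
from collections import defaultdict
from statistics import median


def median_distance_by_outcome(bids):
    """Median trip distance (km) for each bid outcome."""
    by_outcome = defaultdict(list)
    for bid in bids:
        by_outcome[bid["outcome"]].append(bid["distance_km"])
    return {outcome: median(d) for outcome, d in by_outcome.items()}


def peak_ignored_hour_by_area(bids):
    """Hour of day with the most ignored bids, per service area."""
    counts = defaultdict(lambda: defaultdict(int))
    for bid in bids:
        if bid["outcome"] == "ignored":
            counts[bid["area"]][bid["hour"]] += 1
    return {area: max(hours, key=hours.get) for area, hours in counts.items()}


# Toy rows for illustration only, not real data
bids = [
    {"area": "North", "hour": 12, "distance_km": 9.0, "outcome": "ignored"},
    {"area": "North", "hour": 12, "distance_km": 7.5, "outcome": "ignored"},
    {"area": "North", "hour": 18, "distance_km": 3.0, "outcome": "accepted"},
    {"area": "South", "hour": 18, "distance_km": 8.0, "outcome": "ignored"},
    {"area": "South", "hour": 18, "distance_km": 6.5, "outcome": "ignored"},
    {"area": "South", "hour": 12, "distance_km": 2.5, "outcome": "accepted"},
    {"area": "South", "hour": 12, "distance_km": 4.0, "outcome": "ignored"},
]
print(median_distance_by_outcome(bids))
print(peak_ignored_hour_by_area(bids))
```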

Run out of inputs to add to your map? This is common. I find that most products don’t have real levers built in that truly move the needle on the goals the business cares about and it’s important to internalize this realization. The altitude map process results in an objective model of our product’s input levers (or lack thereof) which no amount of data theater can paper over.

Data can no longer be cherry-picked or presented in a way that "proves" the success of a feature or initiative, unless it meaningfully impacts the higher-level metrics it was intended to drive. Ultimately, this type of data theater creates a false sense of progress and leads teams to continue investing in input metrics or features that fail to deliver real value instead of what they should be doing: identifying and building new levers.

Step 4: Discovering new levers & solutions

One of the main challenges product teams face is determining which metrics to focus on and how to effectively evaluate the success of individual feature solutions or bundles of features. Without a clear understanding of the most important metrics it becomes difficult to iterate on work and decide on the next steps.

The altitude map aims to address this issue by stack ranking a set of actionable key input variables that teams can use to measure progress and guide their efforts. More mature products typically have tons of different input metrics associated with each KPI which can lead to analysis paralysis and encourage data theater behavior. You’ll see this where teams simply present the input metric that showed the most improvement.

Overcoming this last data theater challenge requires the final step in the altitude map process—a set of standard, objective measurements of the relationship between each input metric and KPI outcome.

The first powerful tool for this purpose is correlation analysis. Correlation (the =CORREL function in Google Sheets) measures the strength of the linear relationship between two variables, in this case an input metric and a KPI. To calculate it in Google Sheets, put the data for the input metric (e.g., the distance of an order) and the KPI output metric (e.g., driver acceptance rate) in two separate columns and use =CORREL(array1, array2) to calculate the correlation coefficient r between the two data sets. Any company and PM can do this. The resulting correlation coefficient ranges from -1 to +1, where:

A value of +1 indicates a perfect positive linear correlation (as the input lever increases, the KPI increases along a straight line)

A value of -1 indicates a perfect negative linear correlation (as the input lever increases, the KPI decreases along a straight line)

A value of 0 indicates no linear correlation between the two variables
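For anyone working outside a spreadsheet, the coefficient that =CORREL returns (Pearson’s r) takes only a few lines of Python, and sorting candidate inputs by |r| gives a first, purely correlational stack rank. A minimal sketch; the per-segment numbers are made up, and on Python 3.10+ `statistics.correlation` computes the same r.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient r (the statistic Sheets' CORREL returns)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least two points")
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def rank_inputs(inputs, kpi):
    """Stack-rank candidate input metrics by |r| against the KPI, strongest first."""
    scored = [(name, pearson_r(values, kpi)) for name, values in inputs.items()]
    return sorted(scored, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical per-segment data: driver acceptance rate and two candidate inputs
acceptance = [0.80, 0.72, 0.65, 0.55, 0.50]
inputs = {
    "pickup_eta_min": [3, 5, 3, 5, 4],
    "trip_distance_km": [2, 4, 6, 8, 10],
}
for name, r in rank_inputs(inputs, acceptance):
    print(f"{name}: r = {r:+.2f}")
```

A high |r| only nominates an input for testing; it does not show that the input drives the KPI.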

However, it is important to remember that correlation does not necessarily imply causation. A strong correlation between an input lever and a KPI suggests a relationship, but it does not guarantee that changes to the input lever will directly cause changes in the KPI. This is why it is crucial to use correlation analysis as a starting point for hypothesis testing and experimentation rather than relying on it as the sole basis for decision-making. Some correlations may be spurious or influenced by confounding variables so it should simply be used as one of a few objective standard measurement methods in the altitude map process.

The second method is running experiments and observing their outcomes. The third is investigating unexpected and unexplained fluctuations in the data; Cedric Chin at CommonCog has a great, in-depth blog post on this, but it would be too much to cover in this article. In essence, the goal is to identify the extent to which we can influence each input and to systematically tune each input to increase its leverage.
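For the experiment route, one standard way to check whether a change to an input moved a rate KPI beyond chance is a two-proportion z-test. This is a swapped-in, textbook technique rather than a method the article prescribes; the sketch assumes a simple A/B split of bids with independent observations and reasonably large samples, and the bid counts are made up.

```python
from math import erf, sqrt


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference between two rates (e.g. acceptance rates).

    Returns (difference in rates, z statistic, two-sided p-value).
    Assumes independent observations and reasonably large samples.
    """
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return p_b - p_a, z, p_value


# Hypothetical test: accepted bids out of bids offered, control vs. treatment
diff, z, p = two_proportion_z_test(2500, 5000, 2650, 5000)
print(f"lift={diff:.3f}, z={z:.2f}, p={p:.4f}")
```

With these invented counts, a 3-point lift in acceptance gives a z of roughly 3 and a p-value well below 0.01.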

For an altitude map to be a truly actionable, data-theater-absolving resource, it requires this repeatable process: qualifying inputs, testing them, measuring their fluctuations, “promoting” discovered inputs to the altitude map as they are proven, and discarding other inputs as they become irrelevant over time.

Putting it all together

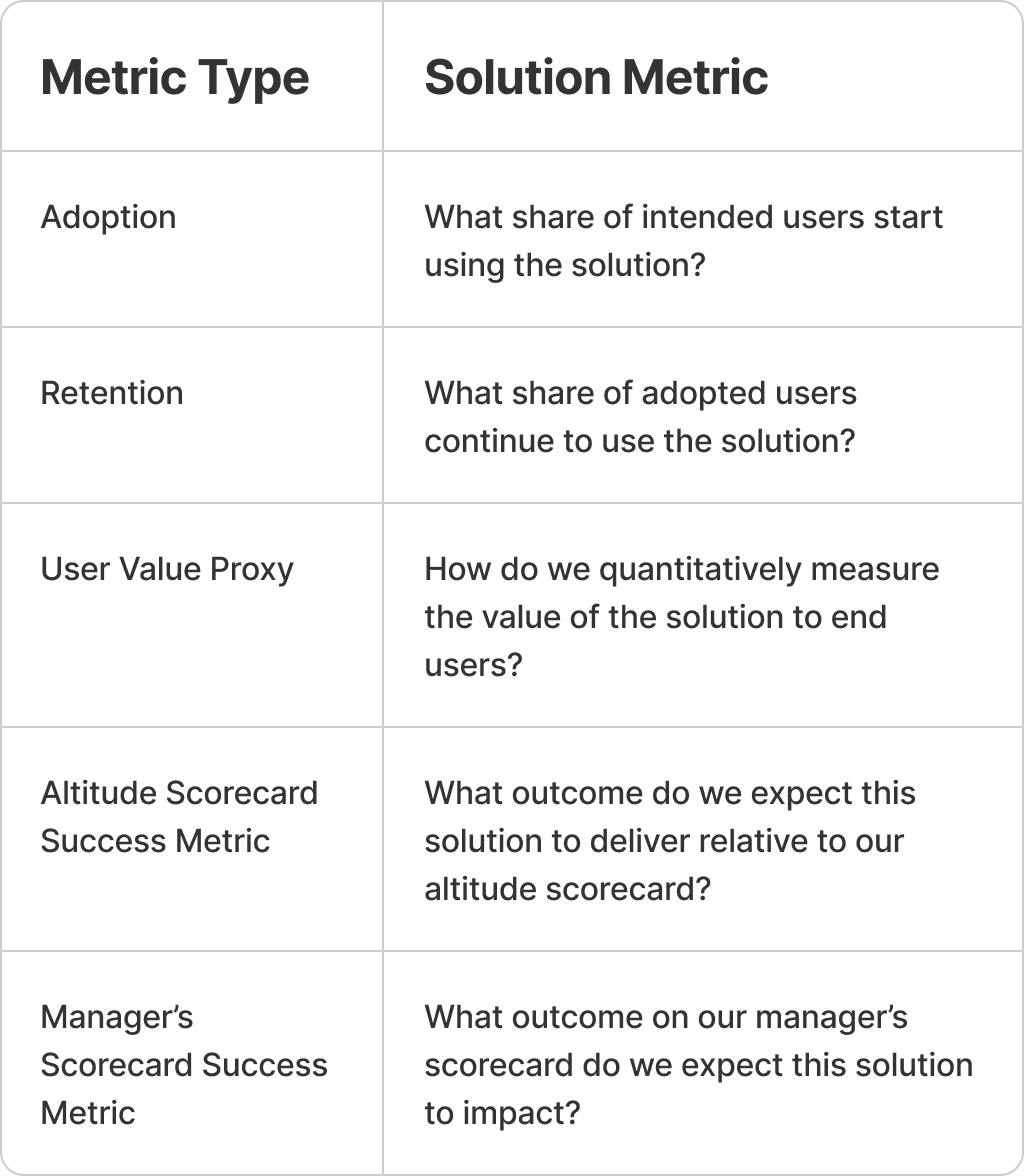

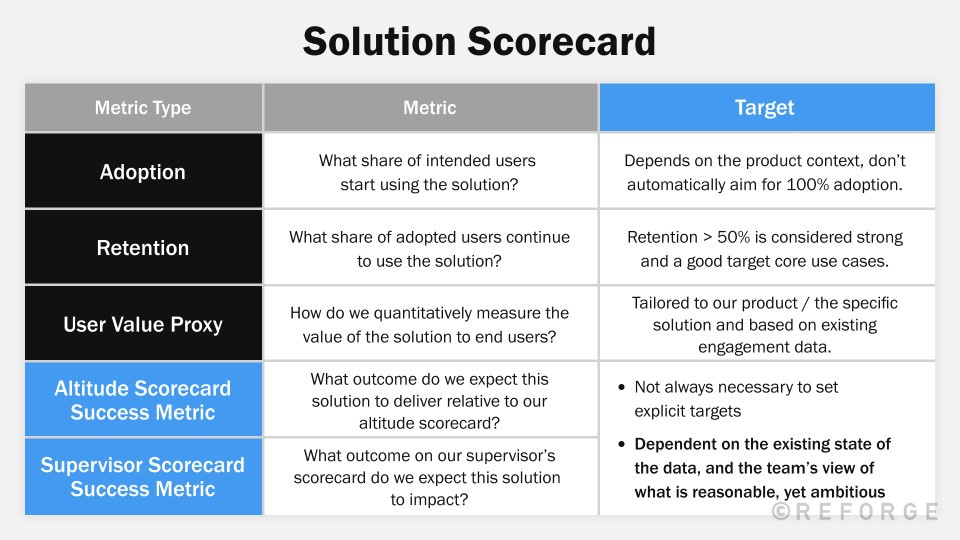

The final piece in our altitude map is the solution scorecard: an index of the features we’re building and an assessment of their value as it relates to our altitude metrics. This transforms intuition-driven “bets” into data-backed decisions that directly ladder up to our (and our manager’s) altitude.

As PMs, we’re primarily tasked with building solutions—usually features, or bundles of features—so we can drive impact in our product area. This means we also need to be able to answer the following questions about the solutions that we’re actively working on: how do the solutions we’re building move the needle on our key altitude metrics? Are the solutions driving impact, and if so, how much? Can we use the data available to us to improve them? And are we using the data available to keep us looking forward so that we’re consistently making smart, timely decisions on what to work on next and when to get started?

In practice, poorly defined bets most commonly take one of two forms. The first is building a feature or product with no obvious target user, while the second is continuing to iterate on a feature or product that has likely already reached its maximum impact.

For example, in GoRide, we found that showing shorter driver pickup estimated times of arrival (ETAs) correlated with higher driver acceptance rates. However, we also discovered a local maximum: an ETA of 4 minutes had the same acceptance rate as the faster zero-to-three-minute ETAs. Once we reached an ETA of approximately 4 minutes for every ride, the opportunity in this input metric was exhausted, and it provided no further leverage on Driver Acceptance Rates.

If we had been susceptible to data theater we might have continued optimizing for ETAs in our solution altitude space, striving to bring them down even further. However, this would have ultimately failed to have any real impact on the higher-level metrics of our altitude scorecard as we had already reached the point of diminishing returns for this particular input metric.
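Spotting a plateau like this can be made routine: find the longest ETA whose acceptance rate is already within a small tolerance of the best rate observed, since pushing ETAs below that point buys little or nothing. A minimal sketch; the per-bucket rates and the one-point tolerance are hypothetical, chosen only to mirror the shape of the finding described above.

```python
def saturation_eta(rate_by_eta, tolerance=0.01):
    """Longest ETA (minutes) whose acceptance rate is within `tolerance`
    of the best rate observed across all ETA buckets."""
    best = max(rate for _, rate in rate_by_eta)
    return max(eta for eta, rate in rate_by_eta if rate >= best - tolerance)


# Hypothetical (pickup ETA in minutes, driver acceptance rate) buckets
rate_by_eta = [
    (0, 0.620), (1, 0.621), (2, 0.619), (3, 0.620),
    (4, 0.618), (5, 0.550), (6, 0.480),
]
print(saturation_eta(rate_by_eta))
```

The tolerance encodes what counts as “the same” acceptance rate; a stricter tolerance treats smaller differences as real.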

The solution: The Solution Scorecard (pun intended)

Mapping our solution space and writing qualitative solution descriptions ensures that we commit to solutions that impact our desired altitudes. Your altitude scorecard is a statement of the world as it exists, while a solution scorecard is a statement of intent about how you are going to move something on your altitude scorecard.

It’s important to note that in many cases you won’t be able to definitively prove that your solution is driving your manager’s scorecard success metric. This is because there are inevitably other solutions that impact that outcome metric. However, it’s still important to try, because it allows you to compare how your different solutions drive the impact you ultimately care about—the impact on your manager’s scorecard.

Additionally, a target is highly dependent on the existing state of the data and on what the team would consider a reasonable, yet appropriately ambitious, goal.

When that is hard to judge, we might instead set a reasonable floor: the bare minimum result we’d need to see to believe the work had been worth it.
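A solution scorecard entry can record both numbers side by side, so that an observed result is read against the floor first and the target second. A minimal sketch; the class, field names, and the example lift values (in percentage points) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SolutionBet:
    name: str
    altitude_kpi: str  # altitude-scorecard metric the solution intends to move
    floor: float       # bare-minimum lift that would make the work worthwhile
    target: float      # reasonable yet ambitious lift

    def assess(self, observed_lift):
        """Place an observed lift relative to the floor and the target."""
        if observed_lift < self.floor:
            return "below floor"
        if observed_lift < self.target:
            return "above floor, below target"
        return "at or above target"


bet = SolutionBet("Surge pricing change", "Driver acceptance rate", floor=1.0, target=3.0)
print(bet.assess(2.0))
```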

Wrapping it all up

Altitude maps are a powerful tool (and process) to help you avoid data theater and better connect your work to what matters for the company. I have implemented this process with many, many companies, and you can use this template to make it easier to implement at yours. Despite their power, altitude maps are only one of the tools you can use as a product manager. If you want to learn about more of them, check out my full “Data for Product Managers” program on Reforge.

Until then, if you want to avoid data theater, model the metrics that matter, and discern controllable vs. uncontrollable inputs then I suggest you get started with altitude maps today.
