Dissecting the Growth Strategy of ChatGPT

It’s more than a novelty effect - a deeper dive on acquisition, retention and monetization.

Hi there, it’s Adam. I started this newsletter to provide a no-bullshit, guided approach to solving some of the hardest problems for people and companies. That includes Growth, Product, company building and parenting while working. If you’re a parent or parenting-curious, I’ve got a podcast on fatherhood and startups: check out Startup Dad.

Q: How did ChatGPT grow so fast and why does it keep growing?

When I worked at Kodak many moons ago, part of the company used to talk about the battle over the “speeds and feeds” of printers. How many dots-per-inch? How many pages per minute? Every year at the Consumer Electronics Show this was the conversation.

There was just one problem with this: no one cared.

I’m not in the prediction business, but I do wonder if the AI model wars are headed in the same direction: not bankruptcy like Kodak, but an LLM sophistication arms race. About a week ago that race got some more fuel. Anthropic, maker of Claude, announced a new version of its LLM (actually three new versions). Mistral did the same a week before that.

Have we entered the era of speeds and feeds for LLMs?

You tell me:

The reality, as the Technically Newsletter put it so eloquently, is that it was never about LLM performance. What “it” is the author referring to? The experience of using these new-fangled AI tools.

The LLM one-upmanship made me wonder why ChatGPT has done so well. Why was it the “fastest company to 100M weekly active users”? Okay, sorry, aside from Threads.

So I started researching, thinking there must clearly be extensive writing on this topic. All of the articles I could find boiled down to some form of:

Timing.

It’s novel.

It’s got a community.

It’s broadly applicable and useful.

Something, something first mover advantage, something something.

Ok, and also water is wet. I’m dissatisfied with those reasons, especially because, aside from first-mover advantage, all the other LLMs are also those things. And for a lot of technology you don’t actually want to be the first mover.

As an alternative to waving our arms and shouting “it’s the novelty effect!!!” today’s newsletter provides a case study on the meteoric rise of ChatGPT through the three growth levers that matter to a product: acquisition, retention and monetization.

Acquisition

First, let’s establish the most important acquisition loops that exist for ChatGPT. Many of these also exist for other interfaces, but there are distinct differences that make ChatGPT more finely tuned for growth.

User Generated, User Distributed Content

This is the initial (and primary) way that ChatGPT grew when it launched. People took screenshots of their prompts and ChatGPT’s output and posted them to social media. There are an almost infinite number of examples of this, and even a Twitter handle called ChatGPT Prompts. Sadly, it has few followers. It doesn’t matter, though: Google has 81.4 million listings for “ChatGPT Prompts.”

No social media (or search) feed was immune; the quest for clickbait knows no limits. It permeated tweet threads, LinkedIn carousels, Threads… threads, TikTok, maybe Facebook (although who goes on FB anymore?), Quora, Reddit, news articles, etc.

This is actually one of my issues with the articles comparing the rise of ChatGPT to something like Instagram. You know the headlines: “It took 2 months for ChatGPT to get to X users and it took Instagram 2 years.”

The reason ChatGPT grew so much faster than something like Instagram is because it grew on the back of all of these social platforms that already existed! You know what Instagram couldn’t leverage when it launched?! Instagram.

The screenshot-and-public-share mechanism only works for so long: people get bored and algorithms start to downrank all those mind-blowing prompts. This is partly a novelty effect, and it loses its luster fairly quickly (after a few weeks or months) until someone figures out a new prompt that captures the imagination of the internet for another stretch. You saw this with DALL-E and the “Make it more…” phenomenon in image generation.

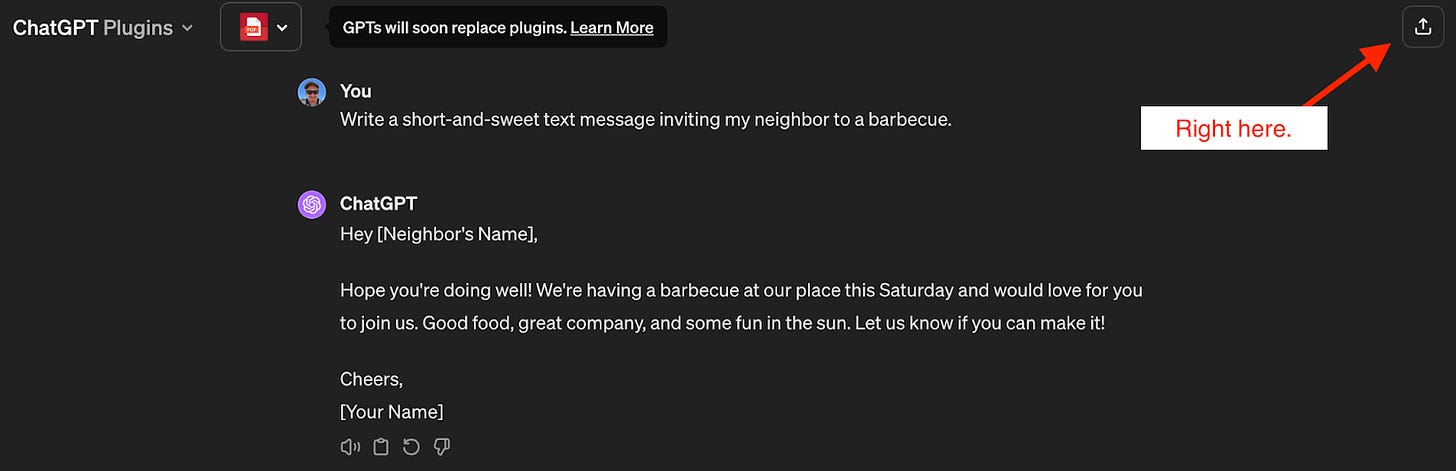

ChatGPT didn’t just rely on the goodwill of users, though. It also leverages a built-in sharing mechanism in the upper-right corner of the interface.

Seems like anyone could (and should) copy this mechanism, but they haven’t. Here’s Claude 3. As impressive as the performance of that new LLM is, where’s the sharing action?

Perplexity has gotten this right, but there’s too much going on. Do I copy the link or do I share? And if I share, why are there so many choices? Also, does anyone share to Facebook anymore?

Custom GPTs Introduce Company Distributed Content

Every new feature release for ChatGPT contains a new way for people to share. Custom GPTs, which launched in November 2023, are a perfect example.

Need a math tutor for your kids? Sure, let me share that with you.

The introduction of Custom GPTs gave people another type of content that they could share with their friends, like the math tutor above. But it also introduced a new vector: publishing publicly.

And what does publishing a Custom GPT publicly do? It allows it to be indexed by Google. Just like that, we’ve added a User Generated, Company Distributed content loop to the mix.

This loop has a slower ramp time because it takes Google a while to decide this is important, but the presence of the GPT Store as a crawlable index of all public GPTs is a good starting point. This also has an impact on retention, which I’ll discuss below.

Invites via Team Plans

In one of my most popular guest posts, Lauryn Motamedi talks about the importance of invites and multiplayer interactions for productivity tools. She would know, having worked at Dropbox, Airtable and now Notion.

Do you know what the most transformative productivity tool of recent years is? That’s right, ChatGPT.

It also happens to be one of the only LLM products that easily allows for team accounts (as of January 2024), with Enterprise accounts arriving a few months before that. If I’m adding a team member, what do I do? I invite them to ChatGPT.

So those are three acquisition loops that started (and continue to fuel) the rapid ascent of the consumer version of ChatGPT. On the enterprise side, OpenAI has a robust sales loop with lead generation powered partly by its consumer growth.

Bringing people to the app to sign up is just one small piece of the puzzle. With so much competition from quickly commoditizing LLMs, how do you effectively win over and retain your customers?

Retention

Fans of this newsletter, and listeners of the various podcasts that have been kind enough to have me on, will know that I care a lot about onboarding experiences. A great onboarding experience paves the road for healthy, long-term retention, and as I’ve said before: it’s the only part of your product that 100% of users are guaranteed to experience.

So let’s do some onboarding comparisons between ChatGPT and other, competitive LLMs.

Here are the first screens for Perplexity, Mistral, Claude, and Pi, all competitors to ChatGPT.

One thing you’ll notice is that three of the four require login, account creation or some other form of navigation and setup before you can engage with the AI. Perplexity doesn’t, but it has at least two places suggesting I should. Mistral doesn’t even bother telling you why you might want to use Le Chat; there’s no context surrounding that signup request at all.

ChatGPT also requires an account… most of the time. I’ve managed to find times when it doesn’t, and in those cases it looks like a streamlined version of Perplexity (maybe they’re running an experiment?).

Pi, which is supposed to be friendlier and more emotionally intelligent, has six setup and information screens as part of onboarding before you get to use the product. This might work to their advantage, but I’d be willing to bet that they lose some percentage of people at each step, which means the initial interaction with the AI would need to drive significantly higher retention to justify the loss. I’m not sure it does.
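The cost of those extra screens compounds. Here’s a minimal sketch of the math, assuming (hypothetically) that each of the six screens independently keeps the same share of the people who reach it. The function names and the 90% per-screen figure are illustrative, not data from Pi. Under that assumption, the share of people who reach the AI is the per-screen rate raised to the sixth power. Retention among those who make it through must then rise by the reciprocal of that fraction to match a product that drops everyone straight into a chat, assuming no drop-off there.

```python
# Hypothetical model: each onboarding screen keeps the same fraction of
# the users who reach it, independently of the other screens.

def funnel_survival(step_retention: float, steps: int) -> float:
    """Fraction of users who get through `steps` screens."""
    if not 0.0 <= step_retention <= 1.0:
        raise ValueError("step_retention must be between 0 and 1")
    return step_retention ** steps


def required_retention_lift(step_retention: float, steps: int) -> float:
    """How many times higher retention must be among survivors to match a
    product with no onboarding screens (and no drop-off before the chat)."""
    return 1.0 / funnel_survival(step_retention, steps)


if __name__ == "__main__":
    # Illustrative assumption only: 90% of users make it past each of 6 screens.
    survivors = funnel_survival(0.9, 6)
    lift = required_retention_lift(0.9, 6)
    print(f"{survivors:.1%} reach the AI; retention must be {lift:.2f}x higher to break even")
```

At 90% per screen, 0.9^6 ≈ 0.53. So under this assumption roughly half of new users never see the AI, and the ones who do would need to retain about 1.9 times as well. Swap in the real per-step conversion rates from your own funnel to make the comparison meaningful.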

So there’s not much difference between ChatGPT and the rest of the AI interfaces when it comes to onboarding. ChatGPT is on par with Claude, has slightly more friction than Perplexity, and has less than Mistral and Pi.

Sidebar

Let’s assume that onboarding had no impact on eventual retention (which we already know isn’t true, but play along with me for a few minutes here).

The majority of chat interfaces on top of LLMs don’t have or allow for cross-conversation context. I understand this both from a computational efficiency standpoint and a safety/security/privacy one. But how each chat interface treats historical conversations is markedly different, and the ease with which you can access that information is (in my opinion) a key driver of retention.

With Claude, I have to navigate back to a home screen to see past conversation prompts.

I can’t access them from a new chat:

When I do find my way back to the home screen there are only a handful of past conversations easily accessible from the UI.

Failure to provide easy access to past conversations seems like a missed opportunity to me. And most others don’t make this easy either – Gemini buries it behind a hidden menu, Perplexity makes the library so small that I have to click on it to see more, Pi doesn’t have chat history but starts to lose context after enough back-and-forth, etc.

None of these give me what I actually want as an end user: easy access to past conversations.

Not only does ChatGPT provide a very readable list of my historical conversations, it also organizes them automatically into recent, 7 days, 30 days, months and past years. And again, the sharing action is built right into the sidebar.

Here’s one reason I keep coming back to ChatGPT: I reuse the same conversations to simplify prompts. For example, I use ChatGPT to help me generate sample copy for the episode introductions to Startup Dad. By linking to a past transcript, I can ask ChatGPT for a structured summary, and I’ve trained it on the way I like to do my introductions. So the next time I need it, I navigate to that past conversation, give it a new link, and say “Do the same thing for this link: [link].” Works like a charm and keeps me coming back.

GPT Customization and Custom GPTs

I’m going to share two customizations that make ChatGPT more retentive and don’t exist among its competitors.

The first is GPT customization via custom instructions.

You can think about this the way you’d think about your favorite restaurant or that local coffee shop that knows your order before you step up to the register. They know your preferences, how you like that latte with an extra shot of espresso, or whether you’d like your GPT responses to apologize and remind you that they’re an AI (or not).

Custom instructions make ChatGPT more retentive for two primary reasons:

Creating them makes you more invested.

It allows for a more personalized experience.

As with easily revisiting past prompts from the sidebar, this builds a habit: once you’ve turned ChatGPT into your neighborhood barista, why would you go get coffee anywhere else?

The second customization is via Custom GPTs. You can think of Custom GPTs like you’d think of templates.

One of the challenges with a completely horizontal product like ChatGPT and other LLMs is that it can be difficult to get started or know what to ask. It’s why most of them include some sort of prompt examples in the interface:

Custom GPTs take this several steps further because they tune ChatGPT to serve a specific purpose. If you need a math tutor, they’ve got you covered. If you need a GPT therapist, they’ve got dozens. Need a writing coach? Check.

Not only is this incredibly useful during onboarding, offering concrete examples of ways to use a very horizontal product, but because the “GPT Store” is constantly being refreshed, it also gives people reasons to come back and check out new ways of engaging.

Now that we’ve established a few of the core ways ChatGPT provides a more retentive experience, namely onboarding (kind of), the sidebar and customization, let’s look at how it cashes in on a quickly expanding user base.

Monetization

None of this would work without effective monetization (and awareness of that monetization).

There are two primary ways that ChatGPT monetizes: a standard SaaS pricing model (freemium, prosumer, team and enterprise tiers) and developer access to its API. I’m focused on the consumer use case today, though I realize a large portion of OpenAI’s revenue comes from developer access to its LLMs.

Much has been discussed about how LLMs can use your content as training data for their models, so I’m pleased to see that ChatGPT effectively uses that scare tactic to its advantage:

The stat reflected in this screenshot also means OpenAI has data to inform its outbound sales strategy for enterprise. It’s a great bridge from product-led growth (PLG) to product-led sales (PLS), and one that a free product with high acquisition and high retention enables.

You don’t see anything nearly this fully formed from the other LLMs; you’d be hard-pressed to find a SaaS-style pricing page on any of them. Many have a standard $20/month tier for “pro” access, but Claude doesn’t even show that price until I’m at the point of payment (one step beyond the upgrade screen!).

So ChatGPT is winning here on monetization options, a freemium model and monetization awareness.

Wrapping it Up

Some might say that my comparisons are unfair—different LLMs have different goals, not everything needs to be ChatGPT, and many of these are in different stages of development.

I get all of that.

Also, we’re trending towards commoditization of the underlying model, and as with most things, eventually it’s the growth levers that matter. In that respect, I see ChatGPT winning on just about every dimension of consumer, PLG and enterprise growth.

Those articles about novelty and community may have been right about what created awareness in the earliest post-launch days. But not so much anymore.

So what are the implications of this for your products? Here are a few:

Speeds and feeds are an academic exercise. The “scores” of an LLM have a certain logical appeal, but that doesn’t do much for the consumer if they don’t care or can’t see the differences. In a commodity space the acquisition, retention and monetization levers in your product will be what propels it to long-term success.

Get people to value quickly and make it easy for them to re-engage with that value. The sidebar and customization options make it easy to form habits and introduce slight switching costs once the user is invested.

Don’t shy away from asking for payment. ChatGPT set the price by coming out of the gate at $20/month. Then they set the team price, and they’re doing the same with enterprise. They’re clear that it costs money to unlock more value, when almost none of their competitors are. If you’ve got the acquisition and retention levers nailed, don’t skimp on monetizing.
